![DevOps-10: Continuous Orchestration [Kubernetes]](http://www.techntuts.com/public/default-image/default-730x400.png)

DevOps-10: Continuous Orchestration [Kubernetes]

DevOps Tutorial Outline:

- DevOps-1: Introduction to DevOps

- DevOps-2: Learn about Linux

- DevOps-3: Version Control System [Git]

- DevOps-4: Source Code Management (SCM) [Github]

- DevOps-5: CI/CD [Jenkins]

- DevOps-6: Software and Automation Testing Framework [Selenium]

- DevOps-7: Configuration Management [Ansible]

- DevOps-8: Containerization [Docker]

- DevOps-9: Continuous Monitoring [Nagios]

- DevOps-10: Continuous Orchestration [Kubernetes]

DevOps-10: Continuous Orchestration [Kubernetes] - Outline

- 10.1: Learning Objectives

- 10.2: Container Orchestration

- 10.3: Kubernetes Overview

- 10.4: Kubernetes Components

- 10.5: Kubernetes Architecture

- 10.6: Kubernetes Installation

- 10.7: Kubernetes Basics - Part One

- 10.8: Kubernetes Basics - Part Two

- 10.9: Kubernetes Networking and Storage

- 10.10: Kubernetes Configuration

- 10.11: Interacting with a Kubernetes Cluster

- 10.12: Demo - Exploring a Kube Cluster

- 10.13: Practice Project

10.1. Learning Objectives

10.2. Container Orchestration

Container Orchestration automates the deployment, management, scaling, and networking of containers. It helps enterprises deploy and manage many containers across many hosts.

Purposes of using Container Orchestration:

- A container orchestrator automatically deploys and manages containerized apps.

- It responds dynamically to changes in the environment.

- It ensures all deployed container instances get updated when a new version of a service is released.

- It lets you deploy the same application across different environments.

Container Orchestration is essential to automate and manage tasks such as:

How Does Container Orchestration Work?

The configuration of an application is described in either a YAML or a JSON file. The file specifies where to find the container images, how to establish networking, and where to store logs. When a new container is deployed, the orchestration tool automatically schedules it onto a host in the cluster. The orchestration tool then manages the container's life cycle based on the specifications in the configuration files.
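To make the idea of a configuration file concrete, here is a minimal sketch in Python that builds a Kubernetes Deployment as a plain dictionary and prints it as JSON, which `kubectl apply -f` accepts just like YAML. The name `web`, the image `nginx:1.25`, the `app` label, and port 80 are hypothetical placeholders, and the sketch does not configure logging.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 2) -> dict:
    """Build a minimal apps/v1 Deployment as a plain dictionary."""
    labels = {"app": name}  # hypothetical labelling convention
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,  # desired number of Pods
            # The selector tells the Deployment which Pods belong to it.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,  # where to find the container image
                            "ports": [{"containerPort": 80}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(deployment_manifest("web", "nginx:1.25"), indent=2))
```

Saving the output to a file such as `web.json` and running `kubectl apply -f web.json` would ask the cluster to schedule two replicas of the container.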

Container Orchestration Tools:

Container orchestration tools provide a framework for managing containers and microservices architectures at scale. They simplify container management by letting you manage multiple containers as one entity. Some popular tools used for container lifecycle management are:

10.3. Kubernetes Overview

Kubernetes is a powerful open-source orchestration platform designed to manage containerized applications. It aims to provide better ways of managing related distributed components and services across varied infrastructure. It is also known as K8s or “kube”. Kubernetes was originally developed by Google, which donated it to the Cloud Native Computing Foundation (CNCF). Major cloud providers offer it as a managed service, for example Amazon Elastic Kubernetes Service (EKS) on AWS and Google Kubernetes Engine (GKE) on GCP.

Features of Kubernetes:

Benefits of Kubernetes:

10.4. Kubernetes Components

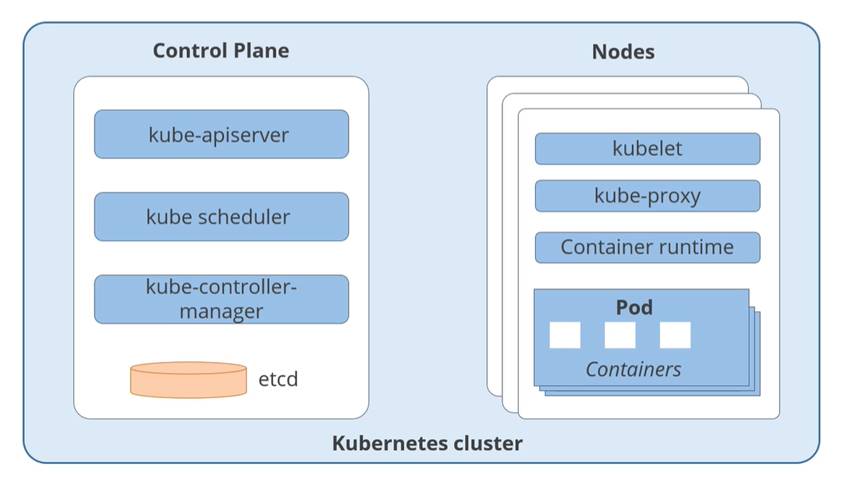

Components of Kubernetes Cluster:

Control Plane Components: The control plane's components make global decisions about the cluster, such as scheduling.

- Kube-apiserver: It exposes the Kubernetes API and is the front end of the control plane. It is designed to scale horizontally by deploying more instances, so you can run several instances of kube-apiserver and balance traffic between them.

- Kube-scheduler: It watches for newly created Pods with no assigned node and selects a node for them to run on.

- Kube-controller-manager: It runs controller processes. Logically, each controller is a separate process, but to reduce complexity they are all compiled into a single binary and run in a single process.

- Etcd: It is a consistent and highly available key-value store used as Kubernetes' backing store for all cluster data.

- Cloud-controller-manager: It embeds cloud-specific control logic and helps when you want to run Kubernetes with a specific cloud provider. It only runs the controllers that are specific to your cloud provider.

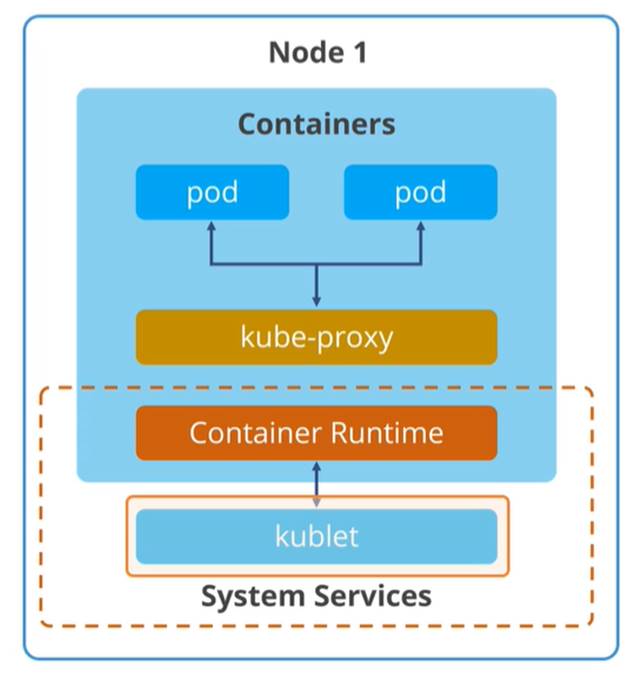

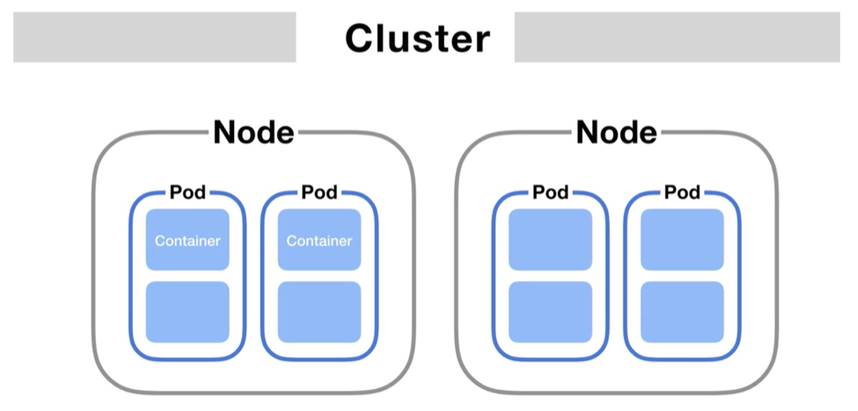

Nodes: A Kubernetes cluster needs at least one compute node, but it normally has many. Pods are scheduled and orchestrated to run on nodes.

- Kubelet: It is an agent that runs on each node and makes sure the containers described in a Pod are running.

- Kube-proxy: It is a network proxy that runs on each node and maintains network rules, which lets it act as a load balancer for Services. It forwards incoming traffic to the right Pod based on the destination IP address and port.

- Container runtime: The container runtime runs containers on a Kubernetes cluster. It is responsible for pulling container images and for starting and stopping containers.

- Pod: A pod is the smallest and simplest unit in the Kubernetes object model. It represents a single instance of an application. Pods are the components of the application workload that run on worker nodes.

10.5. Kubernetes Architecture

Kubernetes brings together individual physical or virtual machines into a cluster, using a shared network for communication between the servers. This cluster is the physical platform on which all Kubernetes components, capabilities, and workloads are configured.

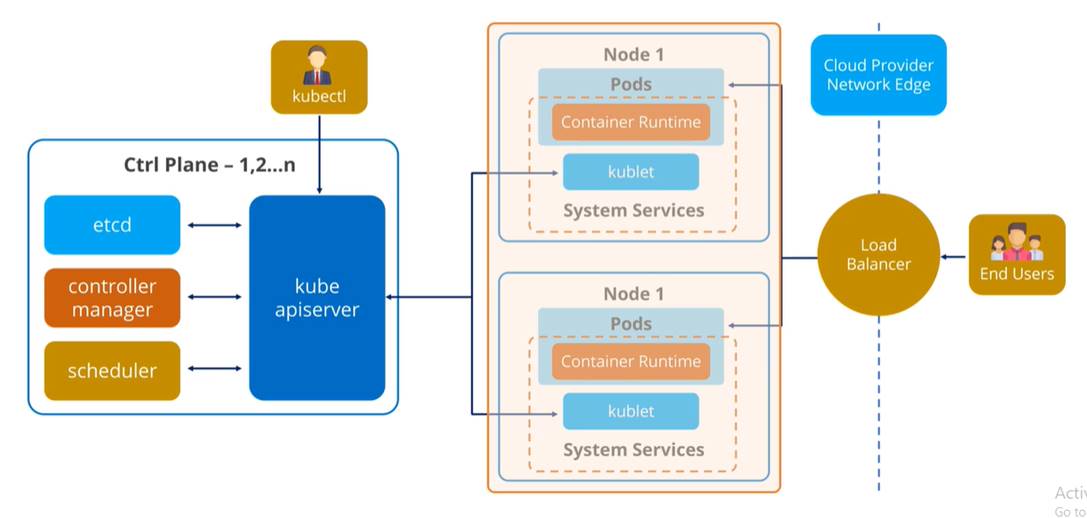

Kubernetes Architecture consists of the control plane, nodes, and pods.

Control plane: The control plane is the system that maintains a record of all Kubernetes objects. It continuously manages object states, responding to changes in the cluster, and works to make the actual state of system objects match the desired state. All the components of the control plane can run on a single master node, or they can be replicated across multiple master nodes for high availability.

Nodes: Cluster nodes are machines that run containers and are managed by the master nodes. The kubelet is the primary agent on each node and is responsible for driving the container execution layer, typically a container runtime such as containerd or Docker.

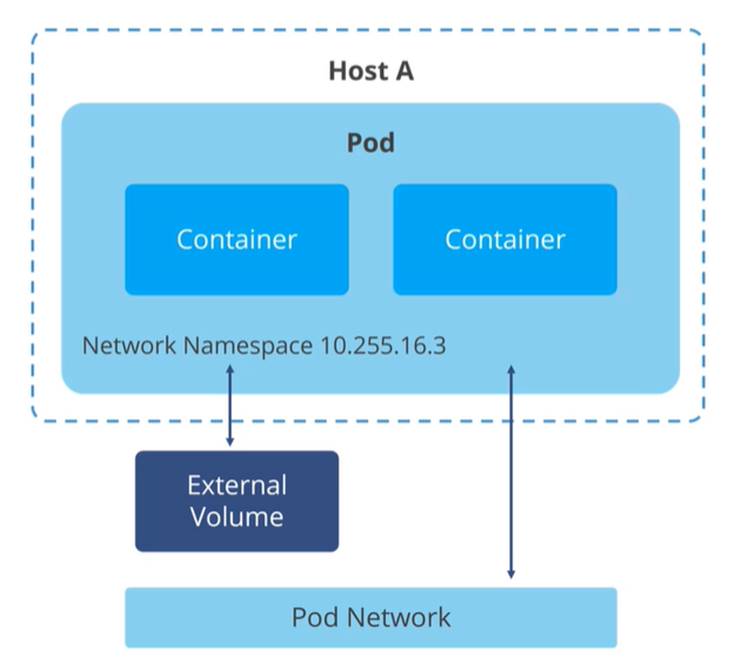

Pods: Pods are one of the crucial concepts in Kubernetes, because they are the key construct that developers interact with. A Pod packages up a single application, which can consist of multiple containers and storage volumes.

10.6. Kubernetes Installation

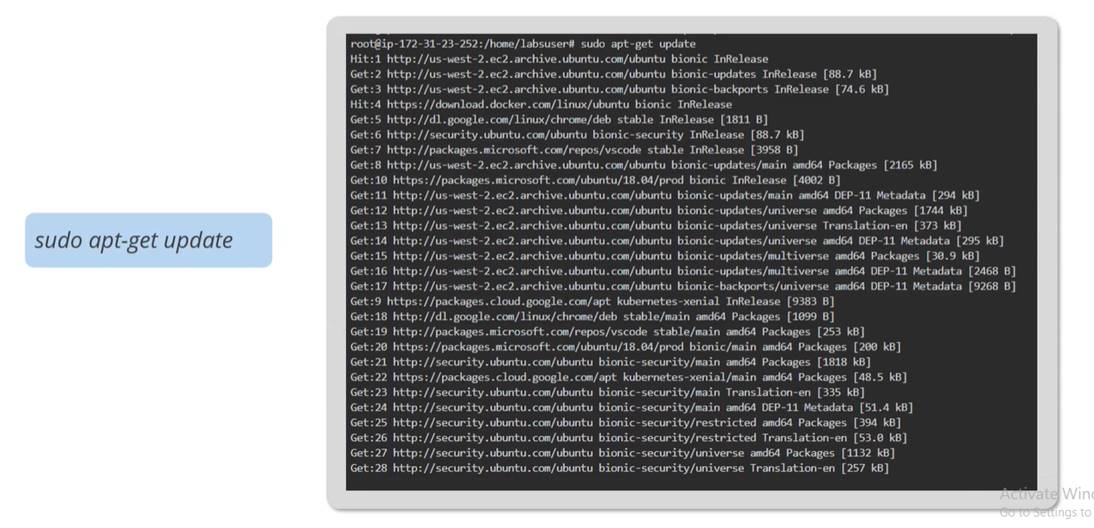

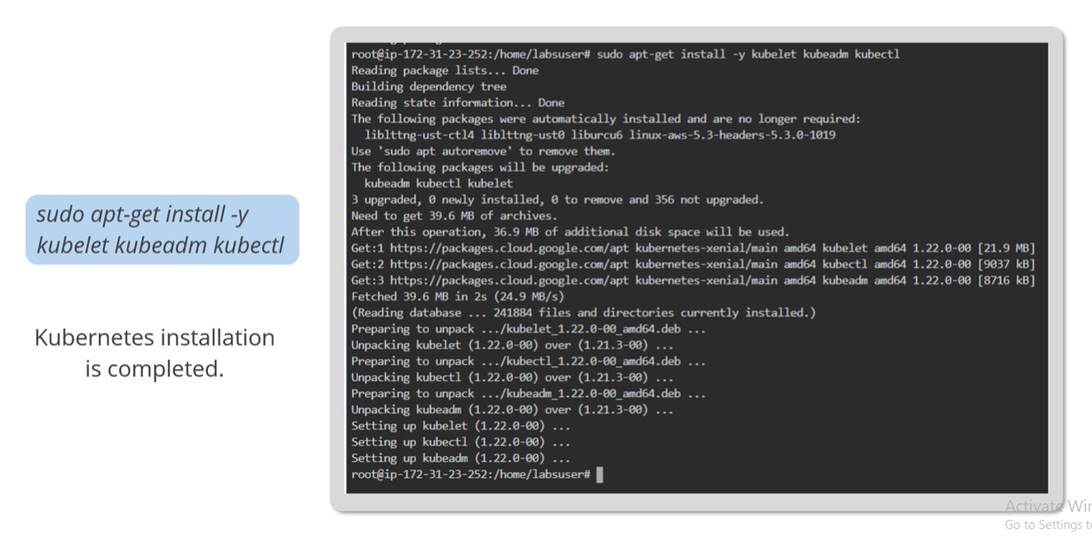

Before installing Kubernetes, you must install Docker.

Steps to install Kubernetes:

- Download and add the signing key for the Kubernetes package repository.

- Update the apt-get package index.

- Install Kubernetes and the tools required to manage it (kubelet, kubeadm, and kubectl).

10.7. Kubernetes Basics - Part One

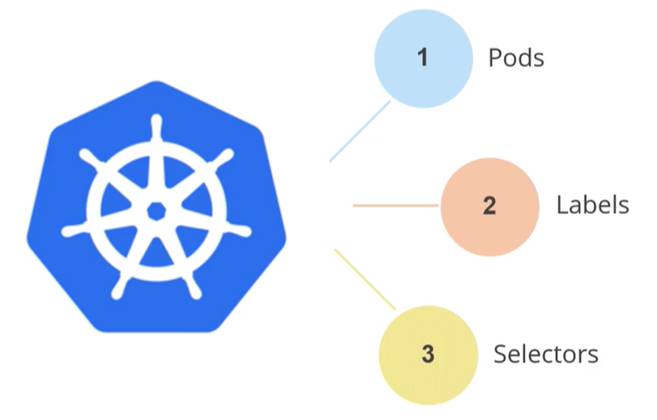

The basic components of Kubernetes are:

- Pods: A Pod is a collection of one or more containers with shared storage and network resources, and a specification for how to run its containers. It is the smallest deployable unit of a Kubernetes application.

Features of Pods:

- A Pod can contain multiple containers, and therefore multiple apps.

- Pod templates define how Pods are created and deployed.

- Pods share the physical resources of the host machine.

How can Pods be used?

There are two ways of using Pods in a Kubernetes cluster:

- Pods that run a single container

- Pods that run multiple containers
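The multi-container case can be sketched as a Pod manifest built in Python. In this hypothetical example, a `helper` container writes a page into a shared `emptyDir` volume and an `app` container running nginx serves it; the container names, images, and mount paths are assumptions chosen for illustration.

```python
import json


def two_container_pod(name: str) -> dict:
    """A Pod whose two containers share one emptyDir volume."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # An emptyDir volume lives as long as the Pod and is shared by
            # every container that mounts it.
            "volumes": [{"name": "shared-data", "emptyDir": {}}],
            "containers": [
                {
                    "name": "app",
                    "image": "nginx:1.25",
                    # nginx serves files from this directory.
                    "volumeMounts": [
                        {"name": "shared-data", "mountPath": "/usr/share/nginx/html"}
                    ],
                },
                {
                    "name": "helper",
                    "image": "busybox:1.36",
                    # The helper writes a page into the same volume, then idles.
                    "command": [
                        "sh", "-c",
                        "echo 'hello from helper' > /pod-data/index.html && sleep 3600",
                    ],
                    "volumeMounts": [{"name": "shared-data", "mountPath": "/pod-data"}],
                },
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(two_container_pod("demo"), indent=2))
```

Because both containers also share the Pod's network namespace, the helper could reach nginx at `localhost:80` without any Service.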

- Labels: Labels are key-value pairs attached to Kubernetes objects. They are used to organize and select subsets of objects.

Example of Labels:

"release": "stable", "tier": "frontend", "track": "daily", "partition": "customerA"

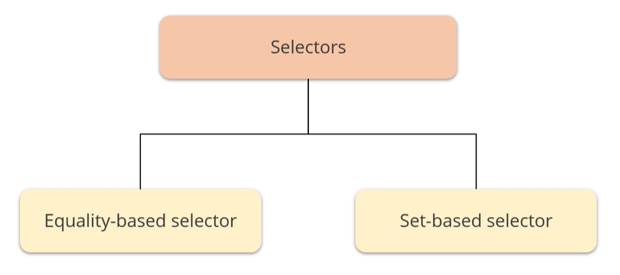

- Selectors: Label selectors are the core grouping primitive in Kubernetes. They are used to select a group of objects.

Types of Selector: The Kubernetes API currently supports two types of selectors:

- Equality-based selector: It uses label keys and values to allow filtering, with the operators =, ==, and !=.

- Set-based selector: It allows filtering keys according to a set of values, with the operators in, notin, and exists.
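Selector semantics can be modelled in a few lines of Python. This sketch assumes the dictionary shape Kubernetes uses inside resource specs: `matchLabels` for equality requirements and `matchExpressions` with the `In`, `NotIn`, `Exists`, and `DoesNotExist` operators for set-based ones. The `!=` operator of the string form used with `kubectl -l` is not modelled, and `matches` is a hypothetical helper, not a Kubernetes API.

```python
def matches(labels: dict, selector: dict) -> bool:
    """Return True if an object's labels satisfy every requirement in the selector."""
    # Equality-based: each key must be present with exactly this value.
    for key, value in selector.get("matchLabels", {}).items():
        if labels.get(key) != value:
            return False
    # Set-based: every expression must hold (requirements are ANDed).
    for expr in selector.get("matchExpressions", []):
        key, op = expr["key"], expr["operator"]
        values = set(expr.get("values", []))
        if op == "In":
            ok = labels.get(key) in values
        elif op == "NotIn":
            # Also true when the key is absent, as in Kubernetes.
            ok = labels.get(key) not in values
        elif op == "Exists":
            ok = key in labels
        elif op == "DoesNotExist":
            ok = key not in labels
        else:
            raise ValueError(f"unknown operator: {op}")
        if not ok:
            return False
    return True


if __name__ == "__main__":
    pods = {
        "web-1": {"release": "stable", "tier": "frontend", "environment": "production"},
        "web-2": {"release": "canary", "tier": "frontend", "environment": "qa"},
        "db-1": {"release": "stable", "tier": "backend", "environment": "production"},
    }
    selector = {
        "matchLabels": {"tier": "frontend"},
        "matchExpressions": [
            {"key": "environment", "operator": "In", "values": ["production", "qa"]},
            {"key": "release", "operator": "NotIn", "values": ["canary"]},
        ],
    }
    print([name for name, labels in pods.items() if matches(labels, selector)])  # prints ['web-1']
```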

10.8. Kubernetes Basics - Part Two

The components covered in this part are:

- Controllers: Controllers are control loops that watch the state of your Kubernetes cluster, then make or request changes where needed.

Features of controllers:

- Controllers track at least one Kubernetes resource type.

- Controllers are responsible for bringing the current state closer to the desired state.

- Controllers take care of the availability of Pods.

- Controllers manage Pods using labels and selectors.
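The control-loop idea can be illustrated with a toy model. `FakeCluster` and `reconcile` are hypothetical stand-ins, not Kubernetes code: the cluster is just a set of Pod names, and a name prefix plays the role of a label selector. Each call compares the actual number of Pods with the desired number and creates or deletes Pods to close the gap.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class FakeCluster:
    """Toy stand-in for cluster state: just the set of running Pod names."""
    pods: set = field(default_factory=set)
    _ids: itertools.count = field(default_factory=itertools.count)

    def create_pod(self, prefix: str) -> str:
        name = f"{prefix}-{next(self._ids)}"
        self.pods.add(name)
        return name

    def delete_pod(self, name: str) -> None:
        self.pods.discard(name)


def reconcile(cluster: FakeCluster, prefix: str, desired: int) -> list:
    """One pass of a control loop: observe, compare with desired state, act."""
    # The name prefix stands in for a label selector.
    current = sorted(p for p in cluster.pods if p.startswith(prefix + "-"))
    diff = desired - len(current)
    actions = []
    if diff > 0:  # too few Pods: create the missing ones
        for _ in range(diff):
            actions.append(("create", cluster.create_pod(prefix)))
    elif diff < 0:  # too many Pods: delete the surplus
        for name in current[:-diff]:
            cluster.delete_pod(name)
            actions.append(("delete", name))
    return actions


if __name__ == "__main__":
    cluster = FakeCluster()
    print(reconcile(cluster, "web", 3))  # creates three Pods
    cluster.delete_pod("web-1")          # simulate a Pod failure
    print(reconcile(cluster, "web", 3))  # replaces the missing Pod
```

A real controller runs this comparison continuously, triggered by watch events from the API server rather than by explicit calls.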

Types of Controllers:

- Replica Set: A ReplicaSet ensures that a specified number of replica Pods is running at any given time.

How a Replica Set selects Pods:

- The ReplicaSet uses its selector to identify the Pods it manages.

- It acquires a matching Pod if that Pod doesn't have a controller owner reference.

Example of Replica Set:
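As an illustration, the following Python sketch defines a ReplicaSet manifest (serializable to JSON for `kubectl apply -f`) and a helper that mimics the acquisition rule: a Pod is adopted when its labels match the selector and it has no owner reference marked as a controller. The names `frontend` and `guestbook` and the image `nginx:1.25` are hypothetical, and `pods_to_acquire` is an illustration rather than the controller's real implementation.

```python
import json

LABELS = {"app": "guestbook", "tier": "frontend"}  # hypothetical labels

replicaset = {
    "apiVersion": "apps/v1",
    "kind": "ReplicaSet",
    "metadata": {"name": "frontend", "labels": LABELS},
    "spec": {
        "replicas": 3,
        # The selector must match the labels in the Pod template below.
        "selector": {"matchLabels": LABELS},
        "template": {
            "metadata": {"labels": LABELS},
            "spec": {"containers": [{"name": "web", "image": "nginx:1.25"}]},
        },
    },
}


def pods_to_acquire(pods: list, selector: dict) -> list:
    """Names of Pods that match the selector and have no controller owner."""
    result = []
    for pod in pods:
        meta = pod["metadata"]
        labels = meta.get("labels", {})
        matched = all(labels.get(k) == v for k, v in selector["matchLabels"].items())
        controlled = any(ref.get("controller") for ref in meta.get("ownerReferences", []))
        if matched and not controlled:
            result.append(meta["name"])
    return result


if __name__ == "__main__":
    print(json.dumps(replicaset, indent=2))
```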

When to use Replica Set:

- Deployments: A Deployment is a controller that changes the actual state to the desired state at a controlled rate.

Why use a Deployment?

- Clean up older ReplicaSets

- Roll out a ReplicaSet

- Declare the new state of Pods

- Indicate that a rollout is stuck

- Apply multiple fixes to Pods

- Scale up to handle more load

Deployment operations include:

- Creating

- Scaling

- Updating

- Cleaning up

- Rolling back

- Pausing and resuming
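Rolling updates are paced by two Deployment settings, `maxSurge` and `maxUnavailable`, which both default to 25%. When they are given as percentages, Kubernetes rounds `maxSurge` up and `maxUnavailable` down. The hypothetical helper below sketches only that arithmetic, computing the bounds on Pod counts during a rollout.

```python
def _to_count(value, replicas: int, round_up: bool) -> int:
    """Convert an absolute number or a percentage string such as '25%' to a Pod count."""
    if isinstance(value, str) and value.endswith("%"):
        percent = int(value[:-1])
        if round_up:
            return -(-replicas * percent // 100)  # integer ceiling
        return replicas * percent // 100  # integer floor
    return int(value)


def rollout_bounds(replicas: int, max_surge="25%", max_unavailable="25%") -> tuple:
    """Return (minimum available Pods, maximum total Pods) during a RollingUpdate."""
    surge = _to_count(max_surge, replicas, round_up=True)
    unavailable = _to_count(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    return replicas - unavailable, replicas + surge


if __name__ == "__main__":
    print(rollout_bounds(10))  # (8, 13)
```

With 10 replicas and the defaults, at least 8 Pods stay available and at most 13 exist at once while old Pods are replaced by new ones.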

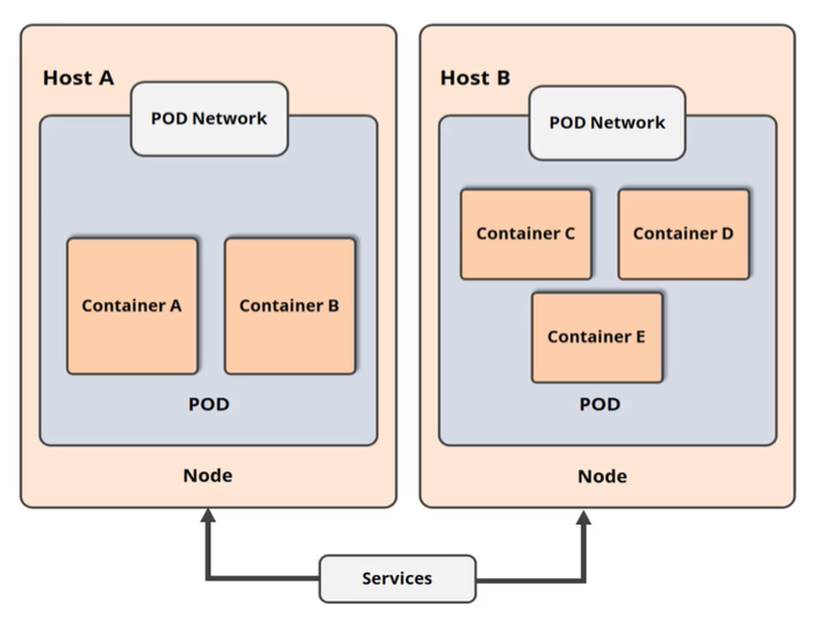

Services: A Service is an abstraction that defines a logical set of Pods and a policy for accessing them. Services are typically defined in YAML.

How do Services work?

A Kubernetes service allows internal and external users of your app to communicate with:

- Nodes

- Pods

- Users

Accessing a Kubernetes Service:

- DNS method: The cluster DNS server watches the Kubernetes API for new Services. When a Service is created, its name becomes available for quick resolution by requesting apps.

- Environment variables: When a Pod runs on a node, the kubelet adds a set of environment variables for each active Service.
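Both discovery methods follow predictable naming rules, sketched below in Python. The DNS form assumes the common default cluster domain `cluster.local`, which a cluster may configure differently. The environment variables shown are the `{SVCNAME}_SERVICE_HOST` and `{SVCNAME}_SERVICE_PORT` pair, with the Service name upper-cased and dashes turned into underscores; they are only injected for Services that already existed when the Pod started. The Service name, IP address, and port are illustrative.

```python
def service_dns_name(service: str, namespace: str = "default",
                     cluster_domain: str = "cluster.local") -> str:
    """Fully qualified DNS name that cluster DNS serves for a Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def service_env_vars(service: str, cluster_ip: str, port: int) -> dict:
    """HOST/PORT variables the kubelet sets for a Service that existed before the Pod."""
    prefix = service.upper().replace("-", "_")
    return {
        f"{prefix}_SERVICE_HOST": cluster_ip,
        f"{prefix}_SERVICE_PORT": str(port),
    }


if __name__ == "__main__":
    print(service_dns_name("redis-primary"))
    print(service_env_vars("redis-primary", "10.0.0.11", 6379))
```

Inside the same namespace, the short name `redis-primary` also resolves, because Pods are configured with DNS search domains for their namespace.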

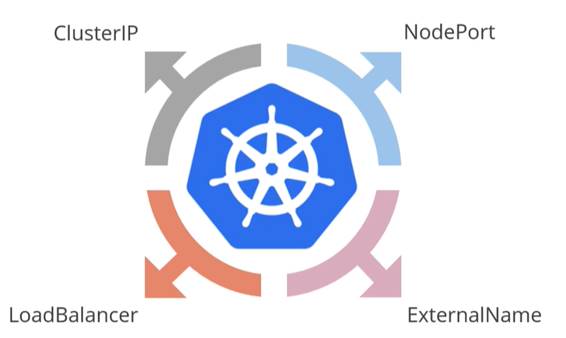

Service Types:

- ClusterIP: The default Service type; it exposes the Service on an internal IP that is reachable only within the Kubernetes cluster.

- NodePort: Exposes the Service on each node's IP at a static port.

- LoadBalancer: Exposes the Service externally using a cloud provider's load balancer.

- ExternalName: Maps the Service to the value of its externalName field (for example, a hostname) by returning a CNAME record with that value.
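A Service manifest differs mainly in its `type` field. This hypothetical Python helper builds one and rejects a few invalid combinations. It assumes the default NodePort range of 30000-32767 (a cluster can change it with the API server's `--service-node-port-range` flag) and an assumed labelling convention where the selector is `app: <name>`.

```python
import json

SERVICE_TYPES = {"ClusterIP", "NodePort", "LoadBalancer", "ExternalName"}
NODE_PORT_RANGE = range(30000, 32768)  # default range 30000-32767 (assumed unchanged)


def service_manifest(name: str, svc_type: str = "ClusterIP", port: int = 80,
                     target_port: int = 80, node_port=None, external_name=None) -> dict:
    """Build a minimal v1 Service of the given type."""
    if svc_type not in SERVICE_TYPES:
        raise ValueError(f"unsupported Service type: {svc_type}")
    metadata = {"name": name}
    if svc_type == "ExternalName":
        if not external_name:
            raise ValueError("ExternalName Services need an external_name")
        # No selector or ports: cluster DNS answers with a CNAME to external_name.
        return {"apiVersion": "v1", "kind": "Service", "metadata": metadata,
                "spec": {"type": svc_type, "externalName": external_name}}
    port_spec = {"port": port, "targetPort": target_port}
    if node_port is not None:
        if svc_type not in ("NodePort", "LoadBalancer"):
            raise ValueError("nodePort only applies to NodePort and LoadBalancer Services")
        if node_port not in NODE_PORT_RANGE:
            raise ValueError(f"nodePort {node_port} is outside 30000-32767")
        port_spec["nodePort"] = node_port
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": metadata,
        "spec": {
            "type": svc_type,
            "selector": {"app": name},  # send traffic to Pods labelled app=<name>
            "ports": [port_spec],
        },
    }


if __name__ == "__main__":
    print(json.dumps(service_manifest("web", "NodePort", 80, 8080, node_port=30080), indent=2))
```

If `nodePort` is omitted for a NodePort Service, Kubernetes picks a free port from the range automatically.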

10.9. Kubernetes Networking and Storage

Kubernetes networking enables Kubernetes components to communicate with one another.

The main concerns of Kubernetes networking are:

- Containers within a Pod communicating with each other over loopback (localhost).

- Services published exclusively for use within your cluster.

- Cluster networking that allows communication between different Pods.

- The Service resource, which allows you to expose an application running in Pods.

Kubernetes Storage: Kubernetes provides a convenient persistent storage mechanism called PersistentVolumes. Volumes are a crucial abstraction in the Kubernetes storage architecture.

How can we keep storage resource consumption to a minimum?

Container storage usage should be limited to reflect the amount of storage available in the local data center or the budget provided for cloud storage services. The two ways of limiting storage consumption by containers are:

- Resource Quotas: Resource Quotas limit the amount of resources, including storage, CPU, and memory, that can be used by all containers within a Kubernetes namespace.

- Storage Classes: Storage Classes define which kinds of storage can be provisioned to containers in response to a PersistentVolumeClaim; combined with Resource Quotas, they let you limit storage per class.
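The quota idea can be sketched as follows: a `ResourceQuota` that caps the total requested storage and the number of PersistentVolumeClaims in a namespace, a sample claim, and a local check of whether a set of claim sizes would fit. The namespace `team-a`, the class name `standard`, and the limits are hypothetical, and the parser handles only the `Ki`, `Mi`, `Gi`, and `Ti` suffixes. In a real cluster, the API server enforces the quota when a claim is created.

```python
import re

_BINARY_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    """Parse a quantity such as '500Mi' or '10Gi' (only binary suffixes are handled)."""
    match = re.fullmatch(r"(\d+)(Ki|Mi|Gi|Ti)?", quantity)
    if match is None:
        raise ValueError(f"unsupported quantity: {quantity}")
    number, unit = match.groups()
    return int(number) * _BINARY_UNITS.get(unit, 1)


quota = {
    "apiVersion": "v1",
    "kind": "ResourceQuota",
    "metadata": {"name": "storage-quota", "namespace": "team-a"},
    "spec": {
        "hard": {
            "requests.storage": "20Gi",     # total storage all claims may request
            "persistentvolumeclaims": "5",  # maximum number of claims
        }
    },
}

claim = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "data", "namespace": "team-a"},
    "spec": {
        "accessModes": ["ReadWriteOnce"],
        "storageClassName": "standard",  # hypothetical StorageClass
        "resources": {"requests": {"storage": "10Gi"}},
    },
}


def fits_quota(claim_sizes: list, hard: dict) -> bool:
    """Local approximation of the quota check for a set of claim sizes."""
    total = sum(to_bytes(size) for size in claim_sizes)
    return (len(claim_sizes) <= int(hard["persistentvolumeclaims"])
            and total <= to_bytes(hard["requests.storage"]))


if __name__ == "__main__":
    size = claim["spec"]["resources"]["requests"]["storage"]
    print(fits_quota([size, size], quota["spec"]["hard"]))
```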

Kubernetes storage best practices:

- Persistent volume settings

- Resource quotas

- Service definition quality

10.10. Kubernetes Configuration

Kubernetes uses two kinds of objects to inject configuration data into containers:

Secrets: A Secret is an object that contains a small amount of sensitive data, such as a password, a token, or a key.

ConfigMap: A ConfigMap is an API object used to store non-confidential data in key-value pairs.

ConfigMaps can be used by Pods as:

- Environment variables

- Command-line arguments

- Configuration files in a volume
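The three ways of consuming a ConfigMap can be shown in one Pod manifest, sketched in Python below together with a Secret. Values under a Secret's `data` field must be base64-encoded; base64 is an encoding, not encryption. The object names, keys, and the `busybox:1.36` image are hypothetical.

```python
import base64
import json

config_map = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "app-config"},
    "data": {"LOG_LEVEL": "info", "app.properties": "greeting=hello\n"},
}


def secret_manifest(name: str, values: dict) -> dict:
    """Build an Opaque Secret; values under `data` must be base64-encoded."""
    encoded = {k: base64.b64encode(v.encode()).decode() for k, v in values.items()}
    return {"apiVersion": "v1", "kind": "Secret", "metadata": {"name": name},
            "type": "Opaque", "data": encoded}


pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "config-demo"},
    "spec": {
        "containers": [{
            "name": "app",
            "image": "busybox:1.36",
            # 1) Environment variable read from one ConfigMap key.
            "env": [{"name": "LOG_LEVEL", "valueFrom": {
                "configMapKeyRef": {"name": "app-config", "key": "LOG_LEVEL"}}}],
            # 2) Command-line argument: Kubernetes expands $(LOG_LEVEL) before the shell runs.
            "command": ["sh", "-c", "echo level=$(LOG_LEVEL) && sleep 3600"],
            # 3) Configuration files: each ConfigMap key becomes a file in /etc/config.
            "volumeMounts": [{"name": "config", "mountPath": "/etc/config"}],
        }],
        "volumes": [{"name": "config", "configMap": {"name": "app-config"}}],
    },
}

if __name__ == "__main__":
    for obj in (config_map, secret_manifest("db-creds", {"password": "s3cret"}), pod):
        print(json.dumps(obj, indent=2))
```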

Configuration best practices:

- Keep Kubernetes updated to the latest version

- Use Pod Security Policies or, since their removal in Kubernetes 1.25, Pod Security Admission

- Use Kubernetes namespaces

- Use Network Policies

- Secure the Kubernetes API server

- Keep container images small

10.11. Interacting with a Kubernetes Cluster

To manage your cluster, Kubernetes provides kubectl, a command-line tool.

kubectl sends instructions to the cluster's control plane through the API server, and it retrieves information about Kubernetes objects from the API server as well.

The syntax to run kubectl commands from the terminal window is:

kubectl [command] [TYPE] [NAME] [flags]

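The arguments for the syntax are:

Command: Specifies the operation to perform on one or more resources, for example create, get, describe, or delete.

TYPE: Specifies the resource type, for example pods or services.

NAME: Specifies the name of the resource. Names are case-sensitive; if NAME is omitted, details for all resources of that type are displayed.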

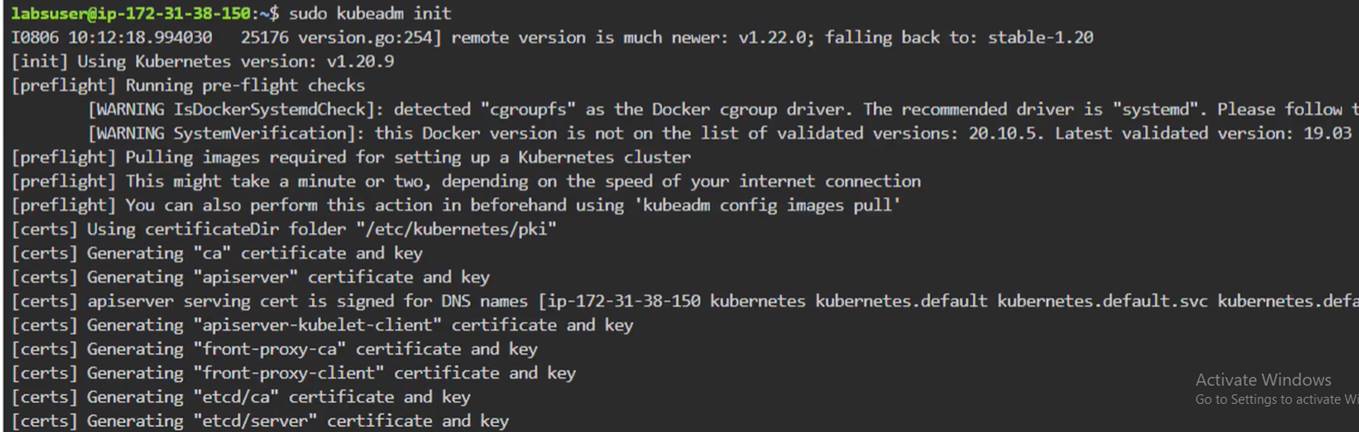

Flags: Specifies optional flags.

Initialize the cluster on the master node using this command:

sudo kubeadm init

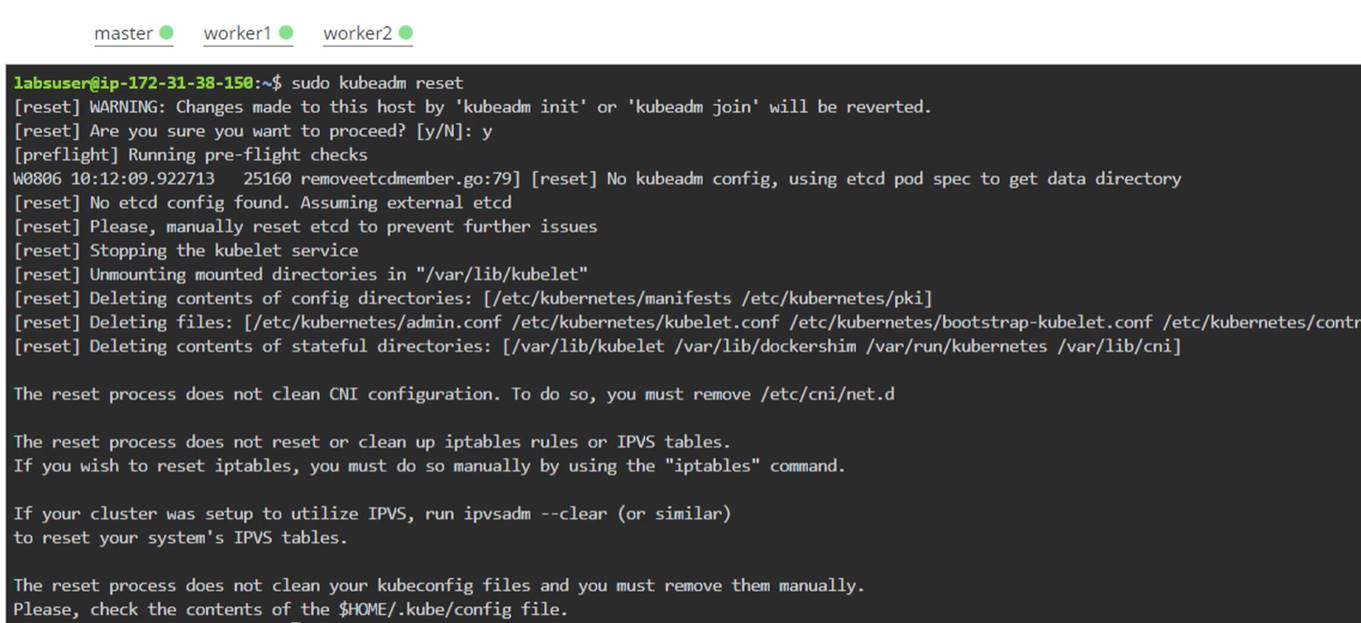

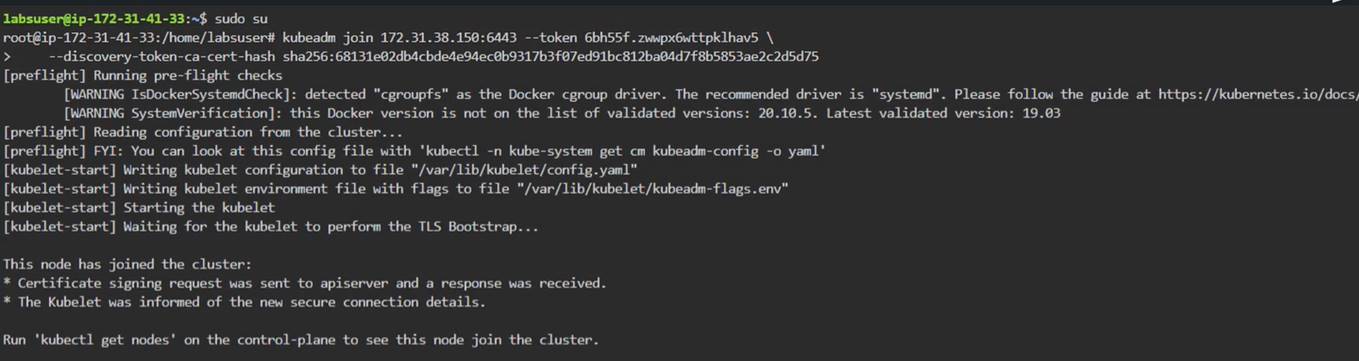
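Because every kubectl invocation follows the `kubectl [command] [TYPE] [NAME] [flags]` pattern, scripts often assemble the arguments programmatically. The sketch below is a hypothetical Python wrapper, not part of kubectl: `kubectl_args` only builds the argument list, and `run_kubectl` assumes kubectl is on the PATH with a working kubeconfig for the cluster initialized above.

```python
import shlex
import subprocess


def kubectl_args(command: str, resource_type: str = "", name: str = "",
                 flags=None) -> list:
    """Assemble `kubectl [command] [TYPE] [NAME] [flags]` as an argument list."""
    args = ["kubectl", command]
    if resource_type:
        args.append(resource_type)
    if name:
        if not resource_type:
            raise ValueError("NAME requires a TYPE")
        args.append(name)
    args.extend(flags or [])
    return args


def run_kubectl(*args, **kwargs) -> str:
    """Run the assembled command and return its standard output."""
    result = subprocess.run(kubectl_args(*args, **kwargs),
                            capture_output=True, text=True, check=True)
    return result.stdout


if __name__ == "__main__":
    # Print the command instead of running it, so this works without a cluster.
    print(shlex.join(kubectl_args("get", "pods", flags=["-o", "wide"])))
```

Passing a list to `subprocess.run` (rather than a single shell string) avoids quoting problems with resource names or flag values.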

10.12. Demo - Exploring a Kube Cluster

Open three terminal windows:

- master

- worker1

- worker2

In the “master” window, run the following command

In the “worker” window, run the following command

Now go back to the “master” window.

10.13. Practice Project

1. Deploy Angular Application in Docker Container

Deploy the Angular application in Docker. The Angular application should be built with the Angular CLI along with Docker Compose for development and production.

Problem Statement Scenario:

HTQual Technology Solutions hired you as a MEAN Stack Developer. The organization decided to implement DevOps to develop and deliver the products. Since HTQual is an Agile organization, they follow Scrum methodology to develop the projects incrementally. The company decided to develop its website on the MEAN stack. Since you are the MEAN stack developer, you have to demonstrate that deploying an Angular application on Docker is a good approach for developing a project and testing it incrementally. You agreed upon the following:

- Set up an image for code development

- Build the application in Docker and host it in Docker Hub

- List the advantages, disadvantages, and document the tasks involved

Your goal is to demonstrate the Angular application and run it in a Docker container.

You must use the following tools:

- Docker – To package the application in a Docker container

- Node.js – To support the Angular application with the required node modules.

- Angular CLI – To execute and bundle the dependencies together.

- Linux (Ubuntu) – As a base operating system to start and execute the project.

Following requirements should be met:

- Document the step-by-step process from the initial installation to the final production.

- Run the Angular application successfully in the Docker container.

- Use Docker Compose to manage the Angular application running inside the Docker container.

2. Deployment of WordPress Application on Kubernetes

Use Jenkins to deploy a WordPress application on Kubernetes.

Description:

While developing a highly scalable application, the real challenges come into the picture when deploying it into production or real-time data scenarios. One of the biggest problems for any product after development is the application getting stuck under normal workloads in a real-time production environment.

Your organization is adopting the DevOps methodology, and to integrate CI into your existing development workflow, you need to automate the deployment of a WordPress application in the production environment using a CI tool like Jenkins, so that it can be load balanced and auto-scaled easily depending on traffic.

Kubernetes has become essential for standardising application components across complex development and production environments. With the increasing complexity of application ecosystems and the growing popularity of Kubernetes, tools that help manage resources within Kubernetes clusters have become essential.

Considering the organizational requirement, you are asked to deploy the WordPress application on a Kubernetes cluster, using the Docker image for the WordPress application in the production environment.

Tools required: Git, GitHub, Jenkins, Docker and Kubernetes

Expected Deliverables:

- Establish a connection between Jenkins and Kubernetes

- The build job should compile and run the code to deploy the application on Kubernetes.
